Last mod: 2025.02.02

OpenAI/ChatGPT API - "Hello world" example

Integrating your systems with the OpenAI API lets you automate complex tasks and add AI-driven features to user interactions. Its models handle natural language processing, code generation, and data analysis, which makes them useful whether you’re improving customer support, optimizing workflows, or developing new applications. In this article, you'll learn how to quickly configure and connect your system to the OpenAI API.

Create and configure an OpenAI account

- Go to https://platform.openai.com/, create an account, and log in.

- Add funds to your OpenAI API account on the billing page: https://platform.openai.com/settings/organization/billing/overview

- Generate your unique API key (OPENAI_API_KEY) at https://platform.openai.com/api-keys. Save the key and do not share it with unauthorized people. In the application you are building, keep this key on the backend side, preferably encrypted. Users must never have access to it.
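
One way to keep the key out of source code is to read it from an environment variable on the backend. The snippet below is a minimal sketch under that assumption; the helper name `load_api_key` is hypothetical and not part of any OpenAI library, while the variable name OPENAI_API_KEY matches the one used in this article.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    Hypothetical helper for illustration only.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail early with a clear message instead of sending an
        # unauthenticated request later.
        raise RuntimeError(f"Environment variable {env_var} is not set")
    return key
```

Failing at startup when the variable is missing tends to be easier to diagnose than an authentication error returned by the API at request time.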

"Hello world" request

Now let's prepare our "Hello World" request. To check that the API works as we expect, we'll ask something slightly harder than a greeting: "What is two multiplied by five?"

We'll use the curl command to call the API:

curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-4o-mini",
    "messages": [{"role": "user", "content": "What is two multiplied by five?"}],
    "temperature": 0.0
  }'

Where:

- $OPENAI_API_KEY - The key generated as described above.

- "model": "gpt-4o-mini" - The model to use. The chosen model determines both the quality of the response and the fees charged per token.

- "role": "user" - Chat-based requests to models like GPT use three main roles: system, user, and assistant. The user role marks messages that come from the user.

- "content": "What is two multiplied by five?" - Our question, which we send to the model and for which we expect an answer.

- "temperature": 0.0 - A parameter that controls the randomness of the model's responses, that is, how deterministic or creative the output will be. For mathematical calculations, a temperature of 0 or 0.1 is recommended.
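
For readers who prefer to call the API from code, the same request can be sketched in Python using only the standard library. This is an illustrative equivalent of the curl command, not an official client; the function names `build_request` and `send` and the 30-second timeout are assumptions made for this example.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(question: str, api_key: str, model: str = "gpt-4o-mini",
                  temperature: float = 0.0) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the curl command."""
    payload = {
        "model": model,
        # A single message with the "user" role carries our question.
        "messages": [{"role": "user", "content": question}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the decoded JSON response."""
    # The timeout value is an arbitrary choice for this sketch.
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Calling `send(build_request("What is two multiplied by five?", api_key))` performs the actual network call and is billed like the curl request. Keeping request construction separate from sending makes it possible to inspect the payload without contacting the API.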

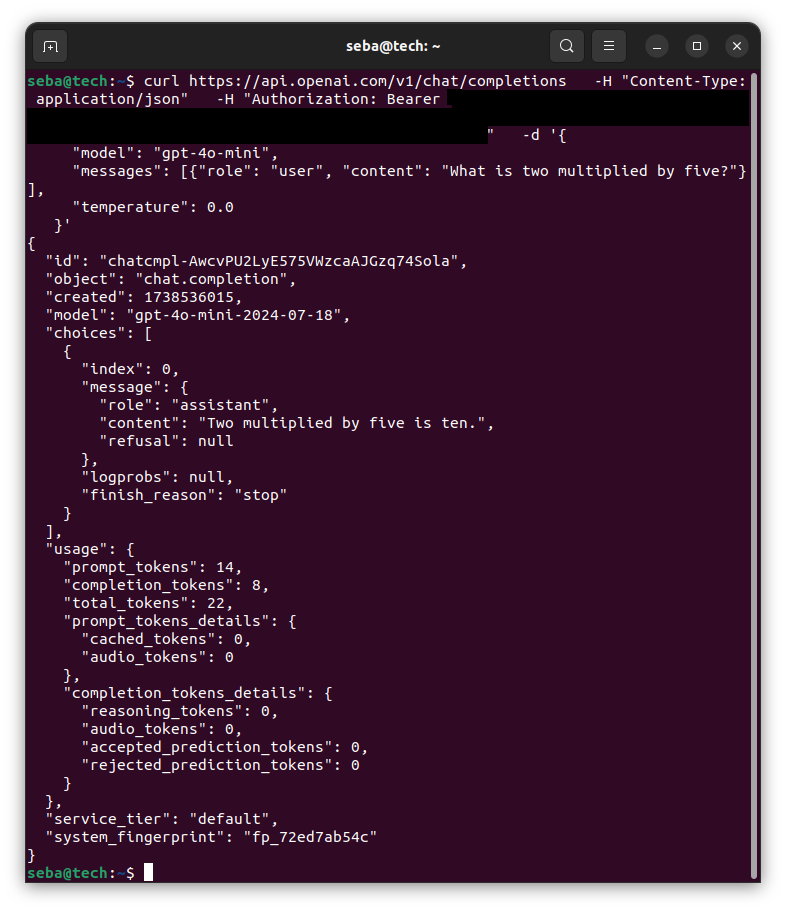

If everything went well we should see an effect similar to the one below:

The important value for us in the returned JSON is:

"content": "Two multiplied by five is ten."

As we can see, this is the correct answer to our question.
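
In a Chat Completions response, the answer text sits under `choices[0].message.content`. The sketch below pulls it out of a parsed response; the sample JSON is an abbreviated, illustrative body built around the answer shown above (a real response carries more fields, such as `id` and `usage`), and the helper name `extract_answer` is hypothetical.

```python
import json

# Abbreviated, illustrative response body, not a verbatim API response.
SAMPLE_RESPONSE = """
{
  "object": "chat.completion",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Two multiplied by five is ten."},
      "finish_reason": "stop"
    }
  ]
}
"""


def extract_answer(response: dict) -> str:
    """Return the text of the first choice in a Chat Completions response."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("Response contains no choices")
    return choices[0]["message"]["content"]


answer = extract_answer(json.loads(SAMPLE_RESPONSE))
print(answer)  # Two multiplied by five is ten.
```

Note that the returned message has the assistant role, the counterpart of the user role sent in the request.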