Last mod: 2025.04.26

Ollama/DeepSeek local - "Hello world" example

Hardware and software

The example is run on:

- CPU: Intel Core i7-4770K

- 32 GB RAM

- Ubuntu 24.04 LTS Server

- Python 3.12

This is a rather old and slow configuration, but it can nevertheless run even the deepseek-r1:32b model.

Installation and console usage

Install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

Run the deepseek-r1:7b model (on first use, ollama run downloads it automatically):

ollama run deepseek-r1:7b

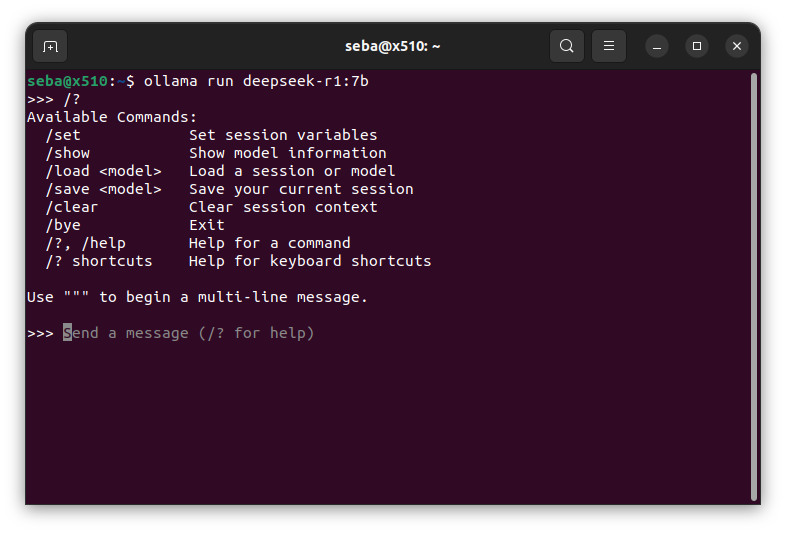

To list the available console commands, type:

/?

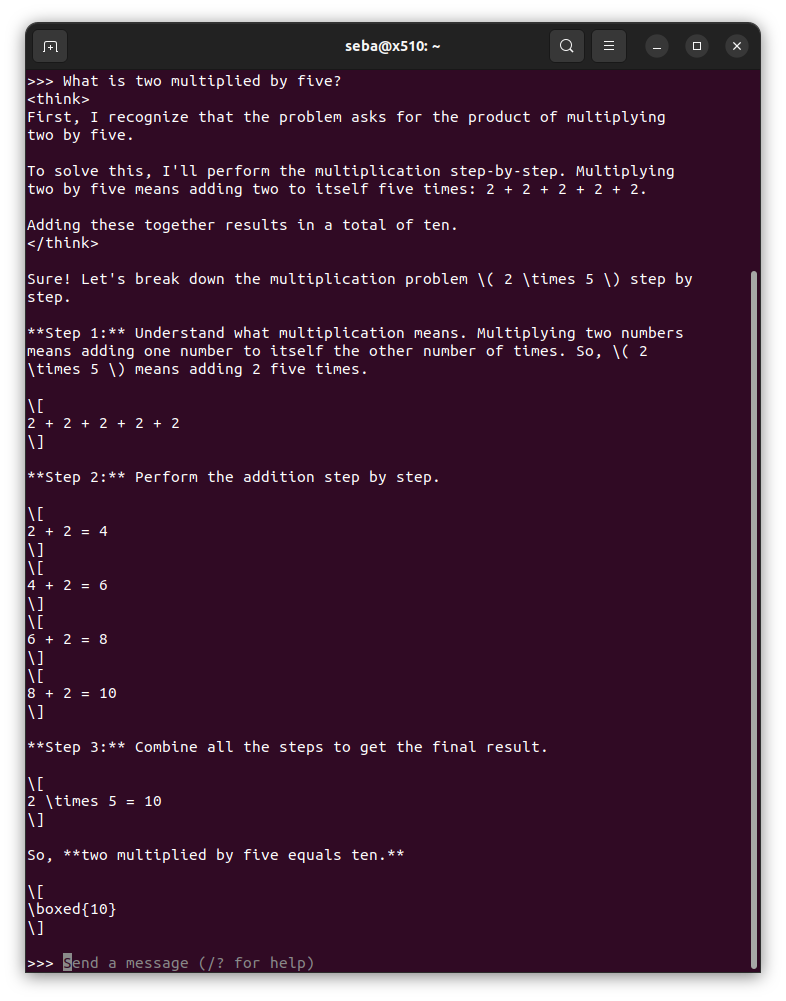

Let us ask a simple question: "What is two multiplied by five?"

After the model finishes its reasoning, we can read the answer in the console:

So, two multiplied by five equals ten.

10
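The same question can also be sent from a script through Ollama's local REST API. The sketch below makes several assumptions:

- Ollama listens on its default address, http://localhost:11434.
- Requests go to the `/api/generate` endpoint with `"stream": false`, so the whole answer arrives as one JSON object with a `response` field.
- deepseek-r1 wraps its reasoning in `<think>...</think>` tags, which the helper removes so that only the final answer remains.

The function names `ask` and `strip_thinking` are hypothetical.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local Ollama server

# Matches a <think>...</think> block; assumes deepseek-r1 marks its reasoning this way
THINK_RE = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_thinking(text: str) -> str:
    """Remove all <think>...</think> blocks and surrounding whitespace, keeping the answer."""
    return THINK_RE.sub("", text).strip()


def ask(model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 600.0) -> str:
    """Send one non-streaming prompt to /api/generate and return the answer text."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generation on slow hardware can take minutes, hence the generous timeout
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return strip_thinking(body["response"])


if __name__ == "__main__":
    try:
        print(ask("deepseek-r1:7b", "What is two multiplied by five?"))
    except OSError as error:  # urllib's URLError is a subclass of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {error}")
```

Only the standard library is used, so the script also runs outside the Open WebUI virtual environment.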

Ollama as a service

After installation, Ollama should already be running as a systemd service. We can check this with:

sudo systemctl status ollama

If for some reason it is not running, we can start it and enable it to start at boot:

sudo systemctl start ollama

sudo systemctl enable ollama

Access via web interface

Prepare a Python 3 virtual environment:

sudo apt install python3-venv

python3 -m venv ~/open-webui-venv

source ~/open-webui-venv/bin/activate
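Before running pip, it can be worth confirming that the virtual environment is really active, so that Open WebUI is not installed into the system Python. A minimal standard-library check relies on how venv works. Inside a venv, `sys.prefix` points to the environment directory, while `sys.base_prefix` still points to the base installation. The function name `in_virtualenv` is hypothetical.

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a virtual environment created with `python3 -m venv`."""
    # In a venv, sys.prefix is the venv directory and sys.base_prefix the base install
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print(f"prefix: {sys.prefix}")
    print("virtual environment active" if in_virtualenv() else "no virtual environment active")
```

Run it with python3 after the source command above. The printed prefix should point to ~/open-webui-venv.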

Install and run Open WebUI:

pip install open-webui

open-webui serve

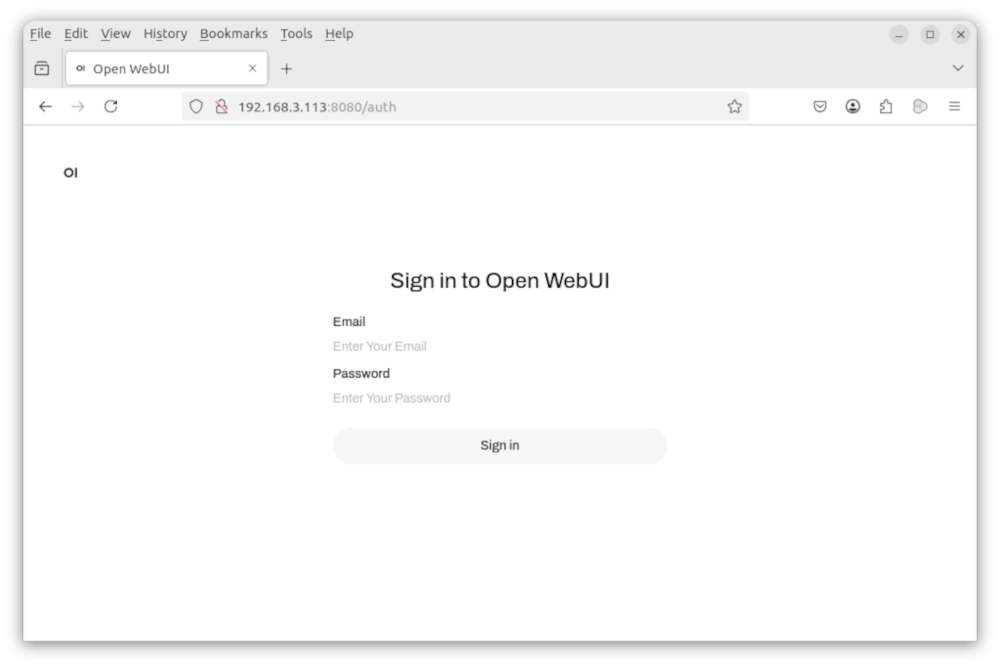

Open http://HOST_WITH_OPEN_WEB-UI:8080 in your browser, where HOST_WITH_OPEN_WEB-UI is the address of the machine running Open WebUI. Create an account and sign in:

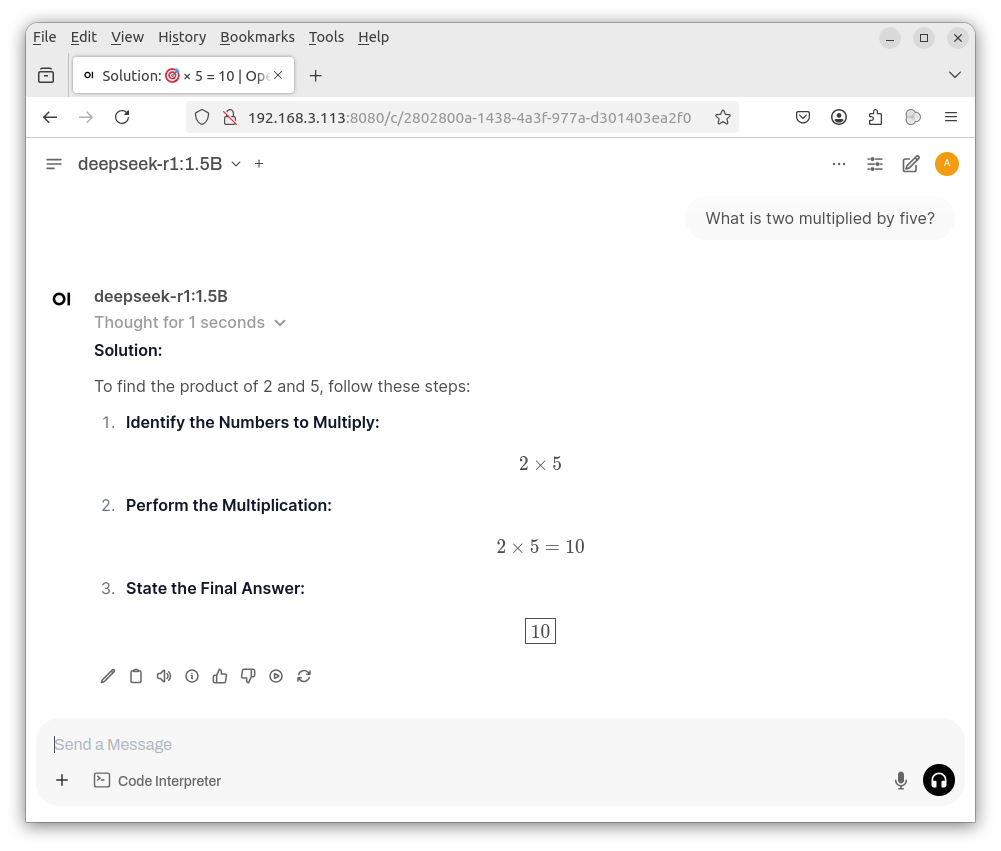

Let us ask the same question again, "What is two multiplied by five?", this time in the browser.
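A chat interface keeps the conversation history and sends it with every new question. The sketch below illustrates that idea against Ollama's `POST /api/chat` endpoint. It is not how Open WebUI is implemented, only a minimal example of a multi-turn conversation with the same model. It assumes the default address http://localhost:11434, a non-streaming request, and a reply whose `message` object carries `role` and `content`. The `Chat` class is a hypothetical helper.

```python
import json
import urllib.request
from dataclasses import dataclass, field


@dataclass
class Chat:
    """Hypothetical helper that keeps the message history for Ollama's /api/chat."""

    model: str
    base_url: str = "http://localhost:11434"  # assumed default Ollama address
    messages: list[dict[str, str]] = field(default_factory=list)

    def payload(self) -> bytes:
        """Serialise the whole history as one non-streaming /api/chat request body."""
        body = {"model": self.model, "messages": self.messages, "stream": False}
        return json.dumps(body).encode("utf-8")

    def send(self, text: str, timeout: float = 600.0) -> str:
        """Add a user message, call the server and store the assistant's reply."""
        self.messages.append({"role": "user", "content": text})
        request = urllib.request.Request(
            f"{self.base_url}/api/chat",
            data=self.payload(),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        try:
            with urllib.request.urlopen(request, timeout=timeout) as response:
                reply = json.loads(response.read().decode("utf-8"))["message"]
        except OSError:
            self.messages.pop()  # drop the unanswered question so the history stays consistent
            raise
        # Keep the answer so that the next question is asked with full context
        self.messages.append({"role": reply["role"], "content": reply["content"]})
        return reply["content"]


if __name__ == "__main__":
    chat = Chat("deepseek-r1:7b")
    try:
        print(chat.send("What is two multiplied by five?"))
        print(chat.send("And what is that multiplied by three?"))
    except OSError as error:
        print(f"Ollama is not reachable: {error}")
```

The second question only makes sense because the first answer is sent back to the model as part of the history.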

Open WebUI as a service

Create the unit file:

sudo vi /etc/systemd/system/open-webui.service

With the following content (replace every <your-username> with your user name):

[Unit]
Description=Open-WebUI Service
After=network.target

[Service]
User=<your-username>
Group=<your-username>
WorkingDirectory=/home/<your-username>
ExecStart=/bin/bash -c 'source /home/<your-username>/open-webui-venv/bin/activate && exec open-webui serve'
Restart=always
RestartSec=5
Environment="PATH=/home/<your-username>/open-webui-venv/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
StandardOutput=append:/var/log/open-webui.log
StandardError=append:/var/log/open-webui.log

[Install]
WantedBy=multi-user.target
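Instead of replacing the placeholders by hand, the unit file can be generated for the current user. This sketch assumes that the virtual environment lives in ~/open-webui-venv, as created earlier, and that the home directory is /home/<user>, as in the unit file. The function `render_unit` and the file name render_unit.py are hypothetical. The script only prints the text, so it can be reviewed first and then written, for example, with `python3 render_unit.py | sudo tee /etc/systemd/system/open-webui.service`.

```python
import getpass
from string import Template

# Same content as the unit file above, with $user as the only placeholder
UNIT_TEMPLATE = Template("""\
[Unit]
Description=Open-WebUI Service
After=network.target

[Service]
User=$user
Group=$user
WorkingDirectory=/home/$user
ExecStart=/bin/bash -c 'source /home/$user/open-webui-venv/bin/activate && exec open-webui serve'
Restart=always
RestartSec=5
Environment="PATH=/home/$user/open-webui-venv/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
StandardOutput=append:/var/log/open-webui.log
StandardError=append:/var/log/open-webui.log

[Install]
WantedBy=multi-user.target
""")


def render_unit(user: str) -> str:
    """Fill the user name into the Open WebUI unit file, rejecting obviously bad names."""
    if not user or "/" in user or any(ch.isspace() for ch in user):
        raise ValueError(f"unexpected user name: {user!r}")
    return UNIT_TEMPLATE.substitute(user=user)


if __name__ == "__main__":
    print(render_unit(getpass.getuser()), end="")
```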

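Reload systemd so that it picks up the new unit file, then start the service and enable it at boot:

sudo systemctl daemon-reload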

sudo systemctl start open-webui

sudo systemctl enable open-webui

Verify:

sudo systemctl status open-webui

If everything went well, the page at http://HOST_WITH_OPEN_WEB-UI:8080 will also be available after a reboot.
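After systemctl start (or a reboot), Open WebUI may need a few seconds or more before it answers. If it crashes, the unit restarts it only after RestartSec=5. A small polling sketch can wait until the page responds.

- It assumes the script runs on the same machine as Open WebUI, so it would be called with http://localhost:8080 instead of HOST_WITH_OPEN_WEB-UI.
- Any HTTP status, even an error, counts as "up", because it proves that the server is listening.
- The helper name `wait_for_http` is hypothetical.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, total_timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll `url` until the server answers, or return False after total_timeout seconds."""
    deadline = time.monotonic() + total_timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            return True  # the server answered, even if with an error status
        except OSError:
            pass  # not listening yet (connection refused, timeout, ...)
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_http("http://localhost:8080")` returns True as soon as Open WebUI responds.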

Popular SLM and LLM models

Ollama is an open-source platform for running language models, both small (SLM) and large (LLM), locally on a computer. It lets users download, manage and run various AI models without relying on cloud services, which keeps data private and allows offline use.

We can install other models, e.g. SpeakLeash/bielik-11b-v2.3-instruct:Q4_K_M:

ollama pull SpeakLeash/bielik-11b-v2.3-instruct:Q4_K_M
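The downloaded models can be listed with ollama list. The same information is available from the HTTP API at `GET /api/tags`, whose `models` entries include `name` and `size` (in bytes). The sketch below prints them in decimal gigabytes (10^9 bytes), assuming the default address http://localhost:11434. The helper names `fetch_tags` and `format_models` are hypothetical. The formatting is kept separate from the HTTP call, so it can be tried on any dictionary of the same shape.

```python
import json
import urllib.request


def format_models(tags: dict) -> list[str]:
    """Turn an /api/tags response into 'name  size GB' lines, largest model first."""
    models = sorted(tags.get("models", []), key=lambda m: m["size"], reverse=True)
    return [f"{m['name']:<50} {m['size'] / 1e9:5.1f} GB" for m in models]


def fetch_tags(base_url: str = "http://localhost:11434", timeout: float = 5.0) -> dict:
    """Fetch the list of locally available models from the Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        for line in format_models(fetch_tags()):
            print(line)
    except OSError as error:
        print(f"Ollama is not reachable: {error}")
```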

Some examples of models that we can run on a regular workstation:

| Model | Size [GB] |
|---|---|
| SpeakLeash/bielik-11b-v2.3-instruct:Q4_K_M | 6.7 |
| mistral:latest | 4.1 |
| deepseek-r1:1.5b | 1.1 |
| deepseek-r1:latest | 4.7 |
| deepseek-r1:32b | 19 |
| deepseek-r1:14b | 9.0 |
| deepseek-r1:7b | 4.7 |
| wizard-vicuna-uncensored:30b | 18 |
| wizardlm-uncensored:latest | 7.4 |
| qwen2.5-coder:latest | 4.7 |
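As a rough planning aid, the sizes in the table can be compared with the available RAM. The rule below is an assumption made for this sketch, not an Ollama rule. A model counts as fitting when its size plus a fixed reserve for the operating system and the context stays within RAM. The 4 GB reserve is an arbitrary choice. Real memory use also depends on the context length and other settings, and a model that fits can still run slowly on older hardware. The names `MODELS`, `RESERVE_GB` and `models_that_fit` are hypothetical.

```python
# Model sizes copied from the table above, in GB
MODELS = {
    "SpeakLeash/bielik-11b-v2.3-instruct:Q4_K_M": 6.7,
    "mistral:latest": 4.1,
    "deepseek-r1:1.5b": 1.1,
    "deepseek-r1:latest": 4.7,
    "deepseek-r1:32b": 19.0,
    "deepseek-r1:14b": 9.0,
    "deepseek-r1:7b": 4.7,
    "wizard-vicuna-uncensored:30b": 18.0,
    "wizardlm-uncensored:latest": 7.4,
    "qwen2.5-coder:latest": 4.7,
}

RESERVE_GB = 4.0  # assumed headroom for the OS and the context; not an Ollama figure


def models_that_fit(ram_gb: float, models: dict[str, float] = MODELS,
                    reserve_gb: float = RESERVE_GB) -> list[str]:
    """Names of models whose size plus the reserve fits into ram_gb, smallest first."""
    fitting = [name for name, size in models.items() if size + reserve_gb <= ram_gb]
    return sorted(fitting, key=models.get)


if __name__ == "__main__":
    for ram in (8, 16, 32):
        print(f"{ram} GB RAM:", ", ".join(models_that_fit(ram)))
```

Under this assumption, every model in the table fits into the 32 GB of the test machine, which matches the observation that even deepseek-r1:32b runs on it.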

Links

https://github.com/ollama/ollama

https://openwebui.com/

https://github.com/QwenLM/Qwen2.5-Coder