Last mod: 2024.12.14

Installing Apache Spark

Software

- Ubuntu 24.04 LTS

- OpenJDK 17

- Spark-3.5.3-bin-hadoop3

Naturally, you can use another OS or other versions; I list these specific ones to keep the configuration steps easy to follow.

Installation

Installing OpenJDK 17:

sudo apt install openjdk-17-jdk-headless
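To check the installation, `java -version` prints the active Java version. If you want to check it from a script, here is a minimal sketch. `java_major_version` is a hypothetical helper name, not part of any package. It handles both the old `1.8.0_xxx` numbering and the newer `17.0.x` numbering:

```shell
# Hypothetical helper (not part of any package): print the Java major version.
# With an argument, parse that version line; otherwise run `java -version`.
java_major_version() {
  local line ver
  line="${1:-$(java -version 2>&1 | head -n 1)}"
  # Keep the text between the double quotes, e.g. 17.0.13 or 1.8.0_392
  ver=$(printf '%s\n' "$line" | sed -n 's/.*"\([^"]*\)".*/\1/p')
  # Java 8 and older use the "1.x" scheme; strip the leading "1."
  case "$ver" in
    1.*) ver="${ver#1.}" ;;
  esac
  # Drop everything from the first '.', '_' or '-' onwards
  printf '%s\n' "${ver%%[._-]*}"
}
```

Called with no argument, it should print 17 once the package above is installed and is the default `java`.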

Before downloading and unpacking Spark, make sure your user has write permission on the /opt directory (or run the commands below with sudo).
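A quick way to check this is shown below. `can_write_dir` is a hypothetical helper written for this note. If you unpack as root instead, keep in mind that the start scripts write their logs into the Spark directory (its `logs` subdirectory by default). A regular user would then need sudo or a `chown` of that directory.

```shell
# Hypothetical helper: succeed only if $1 is an existing directory that the
# current user can write to.
can_write_dir() {
  [ -d "$1" ] && [ -w "$1" ]
}

# Example: decide whether the download step needs sudo.
if can_write_dir /opt; then
  echo "/opt is writable by $(id -un)"
else
  echo "/opt is not writable by $(id -un): use sudo or change the ownership"
fi
```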

Download and unpack:

cd /opt/

wget https://dlcdn.apache.org/spark/spark-3.5.3/spark-3.5.3-bin-hadoop3.tgz

tar -xvzf spark-3.5.3-bin-hadoop3.tgz
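It is worth checking the archive against the SHA-512 checksum that Apache publishes next to each release. For this version, the checksum is at https://downloads.apache.org/spark/spark-3.5.3/spark-3.5.3-bin-hadoop3.tgz.sha512. Note that dlcdn.apache.org only carries current releases. Once 3.5.3 is superseded, the archive and its checksum are kept under https://archive.apache.org/dist/spark/spark-3.5.3/. Below is a minimal sketch of the comparison, using the hypothetical helper name `verify_sha512`:

```shell
# Hypothetical helper: compare a file's SHA-512 digest with an expected value.
# Usage: verify_sha512 FILE EXPECTED_HEX
verify_sha512() {
  local actual expected
  actual=$(sha512sum "$1" | cut -d ' ' -f 1)
  # Accept upper- or lower-case hex and ignore spaces/newlines in the value
  expected=$(printf '%s' "$2" | tr -d ' \n' | tr 'A-F' 'a-f')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $1"
  else
    echo "MISMATCH: $1" >&2
    return 1
  fi
}
```

For example, run `verify_sha512 spark-3.5.3-bin-hadoop3.tgz HEX_FROM_SHA512_FILE`, where the placeholder is the digest copied from the .sha512 file. If that file is in the `sha512sum` format, `sha512sum -c spark-3.5.3-bin-hadoop3.tgz.sha512` performs the same check directly.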

Repeat these commands on every node that will be part of the cluster.
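With more than a few nodes, a loop over SSH saves some typing. This is only a sketch, and it has several limits:

- It assumes passwordless SSH to each host and write access to /opt there.
- It covers the download and unpack steps only, so OpenJDK still has to be installed on each node.
- `install_spark_on` is a hypothetical helper name.

```shell
# Hypothetical helper: run the download and unpack steps on several hosts.
# Assumes passwordless SSH and write access to /opt on every host.
SPARK_TGZ_URL="https://dlcdn.apache.org/spark/spark-3.5.3/spark-3.5.3-bin-hadoop3.tgz"

install_spark_on() {
  local host
  for host in "$@"; do
    echo "==> $host"
    # The URL is expanded locally and single-quoted for the remote shell
    ssh "$host" "cd /opt && wget -q '$SPARK_TGZ_URL' && tar -xzf spark-3.5.3-bin-hadoop3.tgz" || return 1
  done
}
```

For example, run `install_spark_on node1 node2 node3`, where the host names are placeholders for your own.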

On the host chosen as the master, launch the master instance:

cd /opt/spark-3.5.3-bin-hadoop3/sbin

./start-master.sh -h MASTER_IP_OR_DNS
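Instead of passing `-h` on every start, you can store the address in conf/spark-env.sh, which the sbin scripts read. Create that file by copying conf/spark-env.sh.template. Here is a minimal fragment that uses the same placeholder as above. 7077 and 8080 are the default ports and are listed only for clarity:

```shell
# /opt/spark-3.5.3-bin-hadoop3/conf/spark-env.sh
# (copy of conf/spark-env.sh.template with these lines added)
SPARK_MASTER_HOST=MASTER_IP_OR_DNS
SPARK_MASTER_PORT=7077
SPARK_MASTER_WEBUI_PORT=8080
```

With this file in place, `./start-master.sh` can be run without `-h`.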

The master opens two ports:

- 7077: the port that workers (and applications) use to connect to the master

- 8080: the web UI (control panel), served over HTTP
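Before starting any workers, it helps to confirm that both ports are reachable from another machine. If ufw is enabled on Ubuntu, you can open the ports with `sudo ufw allow 7077/tcp` and `sudo ufw allow 8080/tcp`. Keep in mind that workers also listen on ports of their own (the worker web UI defaults to 8081), so a strict firewall needs more rules than these two. Below is a sketch of the reachability check, using the hypothetical helpers `port_open` and `check_master_ports`. It relies on bash's /dev/tcp pseudo-device, so bash must be installed:

```shell
# Hypothetical helper: succeed if a TCP connection to HOST:PORT succeeds
# within 3 seconds. Uses bash's /dev/tcp pseudo-device.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Report both master ports for the given host.
check_master_ports() {
  local port
  for port in 7077 8080; do
    if port_open "$1" "$port"; then
      echo "$1:$port reachable"
    else
      echo "$1:$port NOT reachable"
    fi
  done
}
```

Run `check_master_ports MASTER_IP_OR_DNS` from a worker node. Both lines should report the port as reachable.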

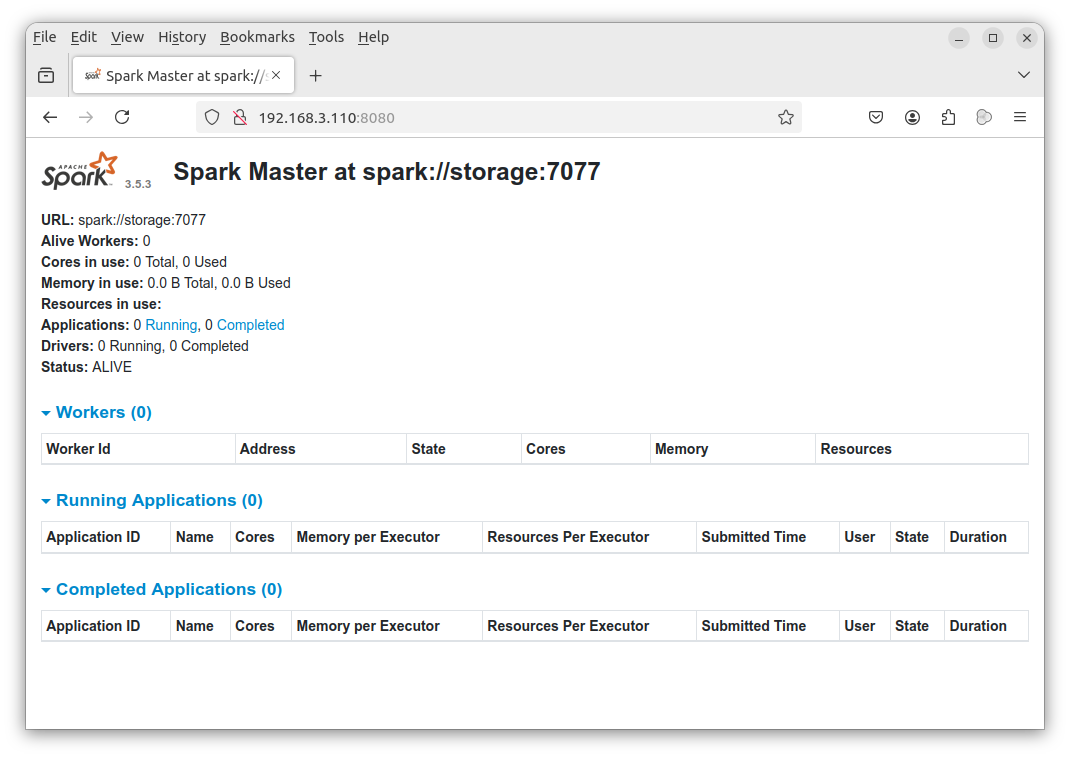

To view the cluster status, open this address in a browser:

http://MASTER_IP_OR_DNS:8080/

Next, on every node that will run a worker, install OpenJDK 17, download and unpack Spark as above, and run:

cd /opt/spark-3.5.3-bin-hadoop3/sbin

./start-worker.sh spark://MASTER_IP_OR_DNS:7077

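Replace MASTER_IP_OR_DNS with the master's IP address or DNS name. Since Spark 3.1, the worker start script has been named start-worker.sh; start-slave.sh is its older, deprecated name.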
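By default, a worker offers all of the machine's cores and its total memory minus 1 GiB. start-worker.sh also accepts `-c` (cores) and `-m` (memory, in a format like 1000M or 2G) to offer less. The helper below is a hypothetical sketch that leaves one core and `RESERVE_GB` gigabytes (an assumed default of 2) for the operating system. It reads /proc/meminfo, so it works on Linux only:

```shell
# Hypothetical helper: print -c/-m options for start-worker.sh that leave one
# core and RESERVE_GB (default 2) gigabytes of memory for the OS.
# Usage: worker_opts [CORES] [MEM_GB]   (defaults: nproc and MemTotal)
worker_opts() {
  local cores mem_gb reserve_gb c m
  cores=${1:-$(nproc)}
  # MemTotal is reported in kB; convert to whole GiB
  mem_gb=${2:-$(awk '/^MemTotal:/ { print int($2 / 1048576) }' /proc/meminfo)}
  reserve_gb=${RESERVE_GB:-2}
  # Never go below 1 core or 1G
  c=$((cores > 1 ? cores - 1 : 1))
  m=$((mem_gb > reserve_gb ? mem_gb - reserve_gb : 1))
  printf '%s\n' "-c $c -m ${m}G"
}
```

For example, run `./start-worker.sh $(worker_opts) spark://MASTER_IP_OR_DNS:7077`. The command substitution is left unquoted on purpose so that the options split into separate arguments.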

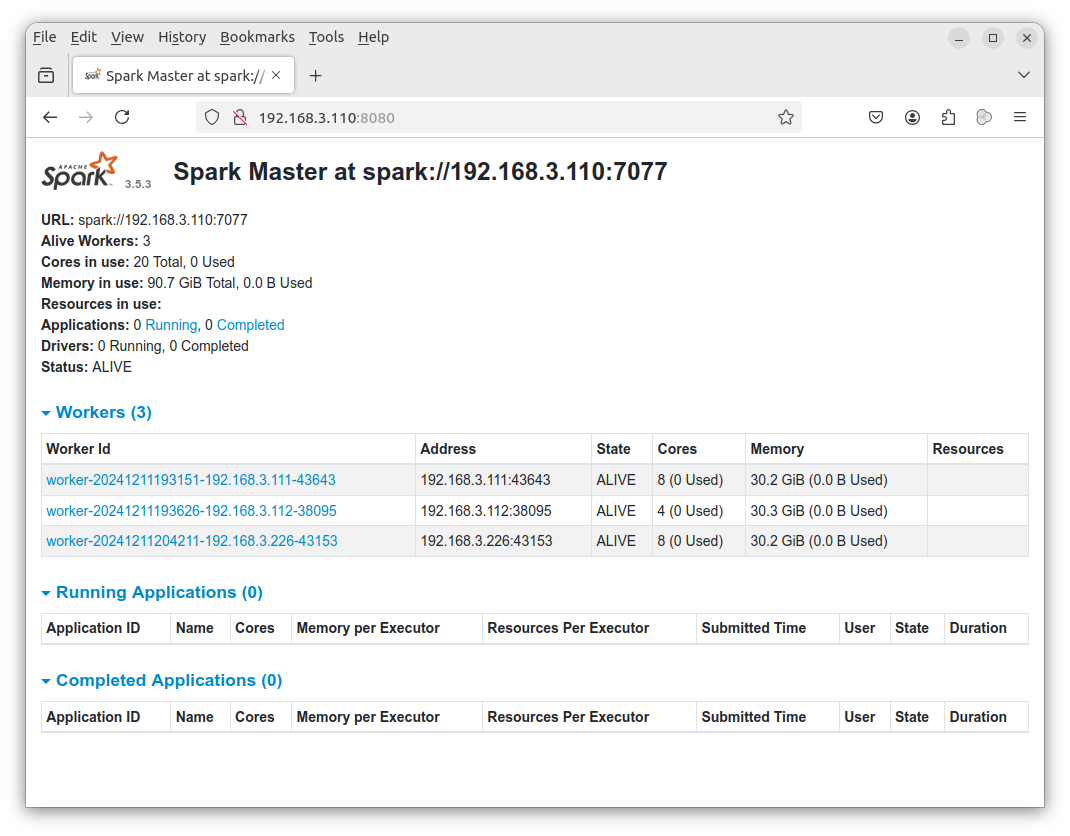

After starting all the workers, refresh the master web UI to check the status of the connected nodes.
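The same information is available without a browser, because the standalone master also serves its status as JSON on the web UI port (at /json/). The sketch below extracts the number of live workers. The field name `aliveworkers` is what I expect in that output, but treat it as an assumption and look at the raw JSON if the helper prints nothing. `alive_workers` is a hypothetical helper name:

```shell
# Hypothetical helper: read the master's JSON status on stdin and print the
# value of its "aliveworkers" field (assumed field name).
alive_workers() {
  grep -o '"aliveworkers" *: *[0-9]*' | grep -o '[0-9]*$'
}
```

`curl -s http://MASTER_IP_OR_DNS:8080/json/ | alive_workers` should print the number of workers shown as ALIVE in the web UI.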

Links

https://openjdk.org/

https://spark.apache.org/downloads.html

https://www.apache.org/dyn/closer.lua/spark/spark-3.5.3/spark-3.5.3-bin-hadoop3.tgz

To be continued...